Deep Dive into Whale-Human Communication with Artificial Intelligence

Can we talk to whales with artificial intelligence?

I recently watched the show Extrapolations on Apple TV+. This futuristic series speculates about the deleterious consequences of global warming as the twenty-first century progresses. In the second episode, a researcher holds a conversation with what is believed to be the last humpback whale in the world. As a Ph.D., I was immediately captivated by the idea of talking to whales. At the rate AI is progressing, this is something that could happen in our lifetime. So I decided to take a deep dive.

Cetacean Brain and Intelligence

We have good reasons to believe that whales are sentient. Modern cetacean brain masses range from 220 g for the adult Franciscana dolphin to 8 kg for the adult sperm whale, the largest brain on Earth [1], while the average adult human brain weighs 1.2 to 1.4 kg.

Does this mean that we are more intelligent than dolphins and less intelligent than whales? Not quite. Brain size is not an absolute indication of intelligence. A better approach is to look at brain size relative to body size. This is how Harry Jerison, an American neuroscientist, came up with the encephalization quotient (EQ) in the late 1960s [2]. This metric is the ratio of the actual brain size to the brain size expected for a given body size. The EQ for modern humans is about 7.0, which means that the human brain is roughly seven times larger than expected for an average mammal of the same body size. Cetaceans come in second place, with an EQ that ranges between 4.0 and 5.0.
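To make the ratio concrete, here is a minimal sketch of the EQ calculation. It assumes Jerison's allometric baseline for mammals, in which the expected brain mass is 0.12 times body mass to the power 2/3, with both masses in grams. The 65 kg body mass is an illustrative assumption, not a figure from the references, and the function names are made up for this example.

```python
def expected_brain_mass_g(body_mass_g: float) -> float:
    """Expected brain mass for a mammal of this body mass (Jerison's baseline, grams)."""
    return 0.12 * body_mass_g ** (2.0 / 3.0)


def encephalization_quotient(brain_mass_g: float, body_mass_g: float) -> float:
    """Ratio of the actual brain mass to the expected brain mass."""
    return brain_mass_g / expected_brain_mass_g(body_mass_g)


# Illustrative inputs: a 1,350 g brain (inside the 1.2 to 1.4 kg range quoted above)
# and an assumed 65 kg body.
human_eq = encephalization_quotient(1350.0, 65_000.0)
print(round(human_eq, 1))
```

With these inputs the result lands close to 7, in line with the figure quoted above. Different body-mass assumptions shift the number noticeably, which is one reason published EQ values vary.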

More recently, researchers have found better predictors of intelligence. For example, there are strong correlations between the number of cortical neurons and cognitive abilities [3]. A useful analogy: more neurons mean more processing power. Moreover, with current technology, including microscopic tissue analysis and MRI, we have been able to analyze the cetacean brain with more precision. The resemblance to the human brain is striking, and the evidence collected so far points to similarities in the complexity and organization of the neocortex, including the presence of spindle cells [4] (long thought to be a human-only type of cell), as well as a relatively high neuronal count compared to humans [5,6].

In addition to these neuroanatomical similarities, cetaceans exhibit complex social behaviours enabled by vocalizations, including cooperation, alliance formation, cooperative hunting, alloparenting (parenting of non-descendant young) and culture [8].

Engineering of data collection: from clicks and codas to meaning

Sperm whales communicate using short bursts of clicks, about 2 seconds long, arranged in patterns known as codas. Whales produce codas while socializing near the surface and at the onset of foraging dives, but not while foraging at depth. Just as with humans, whales in different parts of the world have different dialects. However, unlike human languages, where the morphemes (units of meaning) are known, we don't yet know whale morphemes, and we still need to identify the whale equivalents of letters and words. Researchers are nonetheless confident that, as more data is collected, machine learning can help uncover this latent structure in their language [9].
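As a rough illustration of what "patterns of clicks" can mean as data, the sketch below describes a coda by the gaps between consecutive clicks (inter-click intervals) and by its rhythm, which is those gaps divided by the total duration. The timestamps are hypothetical and the function names are made up for this example. It is not the processing pipeline used by any research team.

```python
def inter_click_intervals(click_times_s):
    """Gaps between consecutive clicks, in seconds."""
    return [later - earlier for earlier, later in zip(click_times_s, click_times_s[1:])]


def rhythm(click_times_s):
    """Intervals as fractions of the total duration, so overall tempo cancels out."""
    intervals = inter_click_intervals(click_times_s)
    total = sum(intervals)
    return [interval / total for interval in intervals]


# Hypothetical five-click coda: three evenly spaced gaps, then a longer pause.
coda = [0.0, 0.2, 0.4, 0.6, 1.0]
# The same pattern produced at half the speed.
slow_coda = [t * 2 for t in coda]

print(inter_click_intervals(coda))
print(rhythm(coda))
print(rhythm(slow_coda))
```

The two rhythm vectors match even though the slow coda lasts twice as long, which is the kind of normalization that lets codas be compared across recordings.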

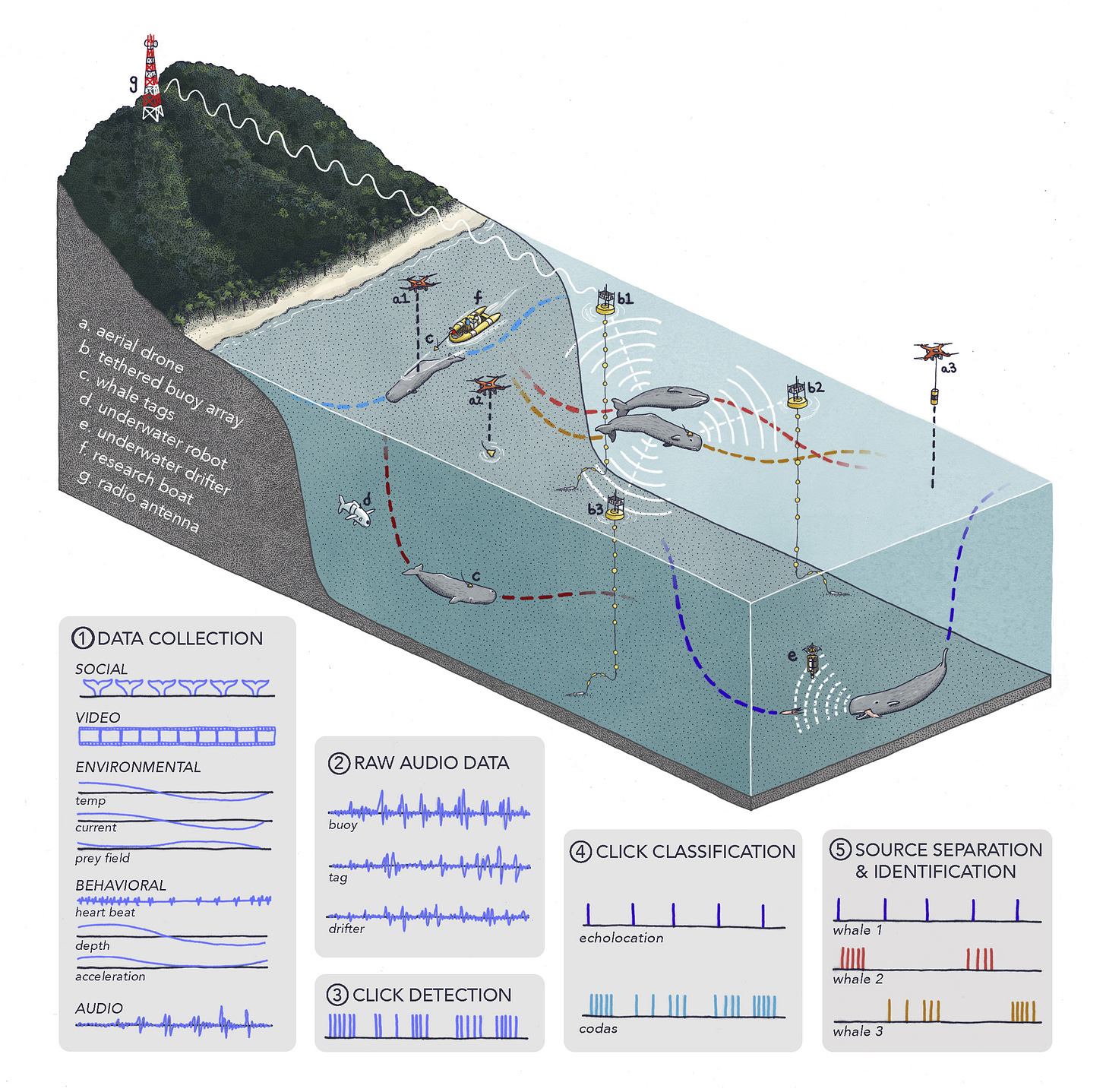

Figure 2 shows the elaborate engineering setup required to collect and process acoustic and behavioural data in whale language research. Video cameras, aquatic and aerial drones, hydrophones (underwater microphones) and other electronic devices are indispensable, as are patient and dedicated researchers observing the whales. One such team is Project CETI (Cetacean Translation Initiative), a scientist-led nonprofit organization led by David Gruber and supported by The Audacious Project [10].

Machine learning: the alignment challenge

One of the key ideas that could help us enable human-to-whale communication is language embedding alignment. It works like this:

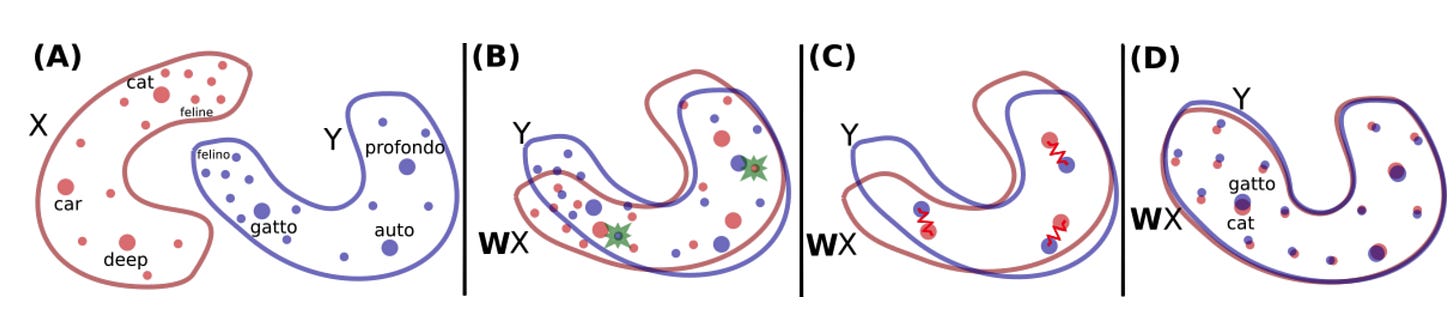

Imagine that you have a set of words and you assign each one a set of coordinates in space, in such a way that words with similar meanings lie close together (small distance) and words with dissimilar meanings lie far apart (large distance). This space is called an embedding.

Now take the two languages you want to translate between and generate an embedding for each. Remember, all you need is to map semantic relationships (meaning) to distances. For example, the words cat and feline lie close together in the English embedding, and similarly, the words gatto and felino are neighbouring vectors in the Italian embedding.
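The idea of "meaning as distance" can be sketched with a toy example. The two-dimensional coordinates below are invented for illustration; real embeddings are learned from large text corpora and typically have hundreds of dimensions.

```python
import math

# Hypothetical 2-D embeddings, hand-picked so that related words sit close together.
english = {"cat": (0.90, 0.80), "feline": (0.85, 0.75), "car": (-0.70, 0.20)}
italian = {"gatto": (0.10, 0.95), "felino": (0.15, 0.90), "auto": (0.60, -0.50)}


def nearest_neighbour(word, embedding):
    """Return the other word in the same embedding with the smallest Euclidean distance."""
    others = [w for w in embedding if w != word]
    return min(others, key=lambda w: math.dist(embedding[word], embedding[w]))


print(nearest_neighbour("cat", english))    # feline
print(nearest_neighbour("gatto", italian))  # felino
```

Note that the two embeddings use unrelated coordinate systems: cat and gatto are nowhere near each other numerically. Only the relationships inside each language are meaningful, which is why the alignment step is needed.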

An optimization algorithm then matches the two embeddings, working out which vectors correspond to each other. This can be achieved by mapping the two embeddings to a common embedding [11].

Finally, translation is possible by encoding from the source language to the common embedding, and from there, decoding to the target language.
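The alignment and translation steps can be sketched together in a toy setting. The assumptions are loud ones: the embeddings are two-dimensional and invented, the Italian space is constructed as an exact rotation of the English one, and the alignment maps English directly into the Italian space rather than into a separate common embedding. Fitting the rotation from known word pairs is the two-dimensional special case of the orthogonal Procrustes problem, not the specific method of [11].

```python
import math


def rotate(vector, theta):
    """Rotate a 2-D vector counter-clockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * vector[0] - s * vector[1], s * vector[0] + c * vector[1])


# Hypothetical English embedding.
english = {"cat": (1.0, 0.0), "dog": (0.8, 0.6), "house": (-0.6, 0.8)}

# Hypothetical Italian embedding: the same geometry, rotated by 90 degrees.
italian = {
    "gatto": rotate(english["cat"], math.pi / 2),
    "cane": rotate(english["dog"], math.pi / 2),
    "casa": rotate(english["house"], math.pi / 2),
}

# Word pairs we pretend to know in advance (the supervision signal).
seed_pairs = [("cat", "gatto"), ("dog", "cane")]


def fit_rotation(pairs):
    """Angle minimizing the squared distance between rotated English and Italian vectors."""
    dot = cross = 0.0
    for en_word, it_word in pairs:
        x, y = english[en_word], italian[it_word]
        dot += x[0] * y[0] + x[1] * y[1]
        cross += x[0] * y[1] - x[1] * y[0]
    return math.atan2(cross, dot)


def translate(en_word, theta):
    """Map an English word into the Italian space and return the closest Italian word."""
    mapped = rotate(english[en_word], theta)
    return min(italian, key=lambda w: math.dist(mapped, italian[w]))


theta = fit_rotation(seed_pairs)
print(translate("house", theta))  # casa
```

The word house was never part of the seed pairs, yet it lands on casa because the rotation learned from cat and dog carries the whole space along with it.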

When we know corresponding words between languages, as in our English-Italian example, we can use those words to guide the matching process in a supervised manner. However, we don't know any words in whale yet. Nonetheless, an unsupervised correspondence can be achieved by focusing on relative distances, matching vector patterns and, more generally, exploiting the commonalities between the shapes of the embeddings. For instance, researchers at Facebook presented one such method at the ICLR conference in 2018, in a paper titled “Word translation without parallel data” [12]. This method uses adversarial learning to align the two embeddings without requiring matching pairs (Figure 3).
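To see why shape alone can be enough, consider the toy sketch below. It is not the adversarial method of [12]. It simply tries every possible pairing between two tiny, invented embeddings and keeps the one whose pairwise distances agree best. The target points are the source points rotated and shifted, with unrelated labels, so no coordinates or word pairs are shared. Brute force like this only works for a handful of points, which is why real methods rely on optimization instead.

```python
import itertools
import math

# Hypothetical source embedding.
source = {"a": (0.0, 0.0), "b": (1.0, 0.0), "c": (0.0, 2.0), "d": (3.0, 3.0)}

# Hypothetical target embedding: the same shape, rotated 90 degrees, shifted,
# and listed in a different order under unrelated labels.
target = {"w": (3.0, 5.0), "x": (2.0, 8.0), "y": (5.0, 5.0), "z": (5.0, 6.0)}


def distance_mismatch(src_words, tgt_words):
    """Sum of squared differences between corresponding pairwise distances."""
    total = 0.0
    for i, j in itertools.combinations(range(len(src_words)), 2):
        d_src = math.dist(source[src_words[i]], source[src_words[j]])
        d_tgt = math.dist(target[tgt_words[i]], target[tgt_words[j]])
        total += (d_src - d_tgt) ** 2
    return total


def match_by_shape():
    """Try every pairing and keep the one that best preserves relative distances."""
    src_words = list(source)
    best = min(
        itertools.permutations(target),
        key=lambda candidate: distance_mismatch(src_words, candidate),
    )
    return dict(zip(src_words, best))


mapping = match_by_shape()
print(mapping)  # {'a': 'y', 'b': 'z', 'c': 'w', 'd': 'x'}
```

The correct pairing is recovered because all six pairwise distances in the source are different, so only one assignment reproduces them. Real embeddings are noisy and far larger, so the match is approximate and has to be searched for far more cleverly.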

Codas and the future

Codas are an active area of research. We know, for example, that they carry information about individual, family and clan identity. We have yet to fully understand their function, variability and structure. We also don't know their composition rules (grammar) or their communication protocol. Are these similar to what you would find in human languages? Researchers have observed that whales can take turns vocalizing, but can also chorus in synchrony or alternation. To discover both grammars and protocols, researchers need more acoustic and behavioural data [9].

Perhaps we will have the first interview with a whale during our lifetime. It would be very interesting to listen to what these majestic creatures with whom we have shared the planet for eons have to say to us. What would you ask them? And more importantly, are we ready to listen? Let me know in the comments and remember to subscribe to Digital Reflections 🐋

Notes and References

[1] Spocter, M. A., Patzke, N., & Manger, P. (2017). Cetacean brains. Reference Module in Neuroscience and Biobehavioral Psychology, 1-6

[3] Dicke, U., & Roth, G. (2016). Neuronal factors determining high intelligence. Philosophical Transactions of the Royal Society B: Biological Sciences, 371(1685), 20150180

[4] Coghlan A., Whales boast the brain cells that 'make us human'. New Scientist. Online. 2006

[5] Mortensen, H. S., Pakkenberg, B., Dam, M., Dietz, R., Sonne, C., Mikkelsen, B., & Eriksen, N. (2014). Quantitative relationships in delphinid neocortex. Frontiers in Neuroanatomy, 8, 132. https://doi.org/10.3389/fnana.2014.00132

[8] Warren J. Cetacean Brain Evolution. Insider Imprint. Online. 2002.

[9] Andreas, J., Beguš, G., Bronstein, M. M., Diamant, R., Delaney, D., Gero, S., ... & Wood, R. J. (2022). Towards Understanding the Communication in Sperm Whales. iScience, 104393

[10] The Audacious Project. CETI. Online. 2023

[11] Joulin, A., Bojanowski, P., Mikolov, T., Jégou, H., & Grave, E. (2018). Loss in translation: Learning bilingual word mapping with a retrieval criterion. arXiv preprint arXiv:1804.07745.

[12] Conneau, A., Lample, G., Ranzato, M. A., Denoyer, L., & Jégou, H. (2017). Word translation without parallel data. arXiv preprint arXiv:1710.04087.